Abstract:

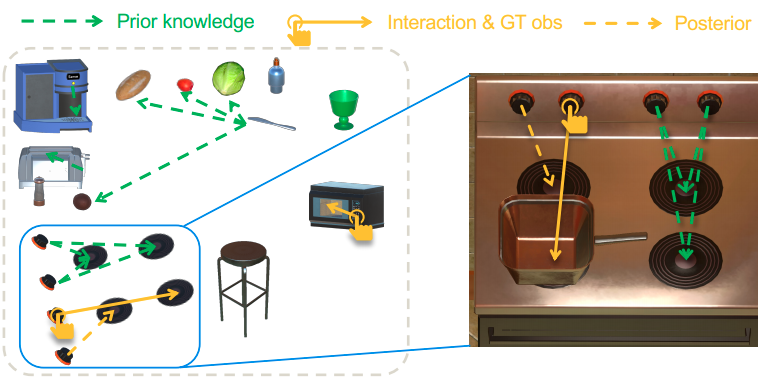

Building embodied intelligent agents that can interact with 3D indoor environments has received increasing research attention in recent years. While most works focus on single-object or agent-object visual functionality and affordances, our work proposes to study a new kind of visual relationship that is also important to perceive and model: inter-object functional relationships (e.g., a switch on the wall turns the light on or off, a remote control operates the TV). Humans often spend little or no effort to infer these relationships, even when entering a new room, by using strong prior knowledge (e.g., knowing that buttons control electrical devices) or only a few exploratory interactions in cases of uncertainty (e.g., multiple switches and lights in the same room). In this paper, we take the first step toward building an AI system that learns inter-object functional relationships in 3D indoor environments. Our key technical contributions are modeling prior knowledge by training over large-scale scenes and designing interactive policies for effectively exploring the training scenes and quickly adapting to novel test scenes. We create a new benchmark based on the AI2Thor and PartNet datasets and perform extensive experiments that demonstrate the effectiveness of our proposed method. Results show that our model successfully learns priors and fast-interactive-adaptation strategies for exploring inter-object functional relationships in complex 3D scenes. Several ablation studies further validate the usefulness of each proposed module.

Bibtex:

@InProceedings{li2022ifrexplore,
  title = {{IFR-Explore}: Learning Inter-object Functional Relationships in 3D Indoor Scenes},
  author = {Li, Qi and Mo, Kaichun and Yang, Yanchao and Zhao, Hang and Guibas, Leonidas},
  booktitle = {International Conference on Learning Representations (ICLR)},
  year = {2022}
}