Multiview Aggregation for Learning Category-Specific Shape Reconstruction

Abstract

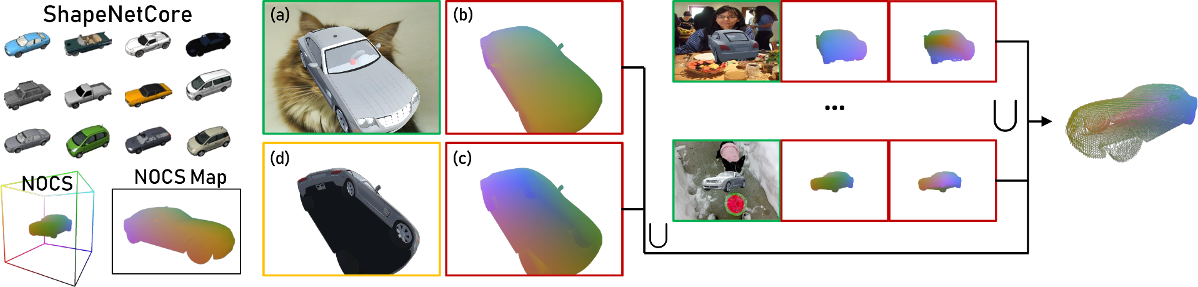

We investigate the problem of learning category-specific 3D shape reconstruction from a variable number of RGB views of previously unobserved object instances. Most approaches for multiview shape reconstruction operate on sparse shape representations, or assume a fixed number of views. We present a method that can estimate dense 3D shape, and aggregate shape across multiple and varying number of input views. Given a single input view of an object instance, we propose a representation that encodes the dense shape of the visible object surface as well as the surface behind line of sight occluded by the visible surface. When multiple input views are available, the shape representation is designed to be aggregated into a single 3D shape using an inexpensive union operation. We train a 2D CNN to learn to predict this representation from a variable number of views (1 or more). We further aggregate multiview information by using permutation equivariant layers that promote order-agnostic view information exchange at the feature level. Experiments show that our approach is able to produce dense 3D reconstructions of objects that improve in quality as more views are added.

Downloads

Citation

@InProceedings{xnocs_sridhar2019,

author = {Sridhar, Srinath and Rempe, Davis and Valentin, Julien and Bouaziz, Sofien and Guibas, Leonidas J.},

title = {Multiview Aggregation for Learning Category-Specific Shape Reconstruction},

booktitle={Advances in Neural Information Processing Systems (NeurIPS)},

year={2019}

}

Acknowledgments

This work was supported by the Google Daydream University Research Program, AWS Machine Learning Awards Program, and the Toyota-Stanford Center for AI Research. We would like to thank Jiahui Lei, the anonymous reviewers, and members of the Guibas Group for useful feedback. Toyota Research Institute ("TRI") provided funds to assist the authors with their research but this article solely reflects the opinions and conclusions of its authors and not TRI or any other Toyota entity.

This page is Zotero translator friendly.