Contact and Human Dynamics from Monocular Video

Abstract

Existing deep models predict 2D and 3D kinematic poses from video that are approximately accurate, but contain visible errors that violate physical constraints, such as feet penetrating the ground and bodies leaning at extreme angles. In this paper, we present a physics-based method for inferring 3D human motion from video sequences that takes initial 2D and 3D pose estimates as input. We first estimate ground contact timings with a novel prediction network which is trained without hand-labeled data. A physics-based trajectory optimization then solves for a physically-plausible motion, based on the inputs. We show this process produces motions that are significantly more realistic than those from purely kinematic methods, substantially improving quantitative measures of both kinematic and dynamic plausibility. We demonstrate our method on character animation and pose estimation tasks on dynamic motions of dancing and sports with complex contact patterns.

Downloads

Citation

@inproceedings{RempeContactDynamics2020,

author={Rempe, Davis and Guibas, Leonidas J. and Hertzmann, Aaron and Russell, Bryan and Villegas, Ruben and Yang, Jimei},

title={Contact and Human Dynamics from Monocular Video},

booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},

year={2020}

}

Related Projects

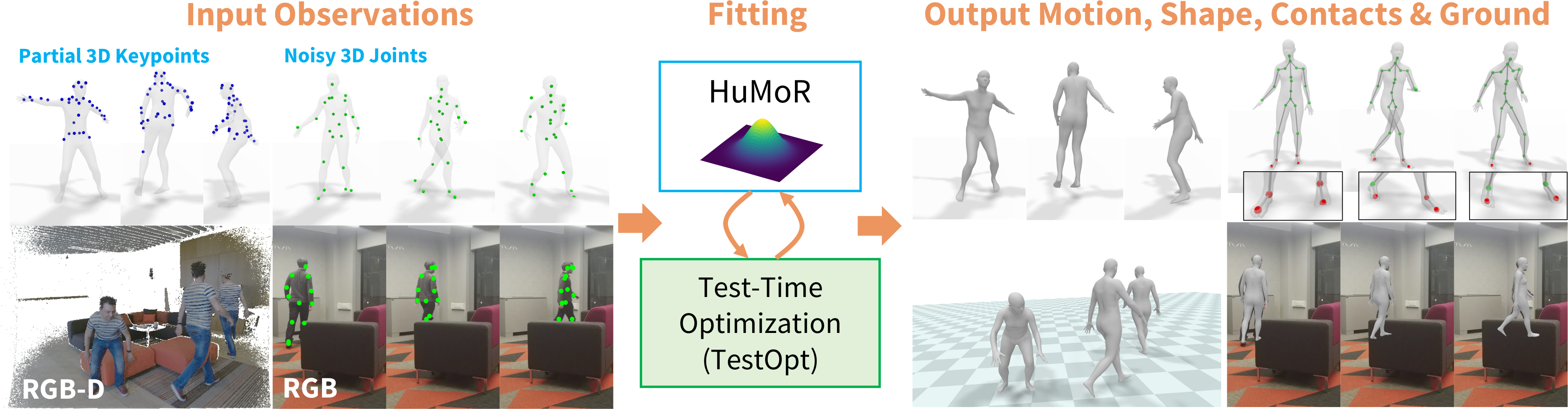

HuMoR: 3D Human Motion Model for Robust Pose Estimation

Davis Rempe, Tolga Birdal, Aaron Hertzmann, Jimei Yang, Srinath Sridhar, Leonidas J. GuibasICCV 2021 (Oral)

Acknowledgments

This work was in part supported by NSF grant IIS-1763268, grants from the Samsung GRO program and the Stanford SAIL Toyota Research Center, and a gift from Adobe Corporation. We thank the following YouTube channels for sharing their videos online: Dance FreaX, Dancercise Studio, Fencer’s Edge, MihranTV, DANCE TUTORIALS, Deepak Tulsyan, Gibson Moraes, and pigmie.